Encoding Craft: Making Your Standards AI-Readable

In my previous article about coaching teams to leverage AI effectively in their work, I introduced three principles: Aim then accelerate; Build a pattern library; and Instrument your guardrails. The response was immediate: “This makes sense — but how do we actually make our patterns and standards work with AI?”

That’s the question this article answers. And it’s the key unlock that many organizations are missing.

Here’s what I’m seeing: traditional companies grow in direct proportion to headcount and resources. You need more people to do more work. But AI-first organizations scale through compounding effects — reusable agents, codified intelligence, and AI-augmented teams. A 10-person team can now outperform a 100-person organization. This isn’t a productivity gain. It’s a new scale paradigm.

The difference isn’t just about using AI tools. It’s about building your Flywheel — the systems that convert your taste and judgment calls (your Compass) into repeatable excellence at speed and scale. And the Flywheel only works when your standards and patterns are encoded in a format AI can reference and apply consistently across teams and functions.

Many teams are building pattern libraries and quality standards for human reference. That’s useful, but it misses the real leverage opportunity: if you structure these assets to be AI-readable, every team member benefits from institutional knowledge encoded directly into their workflow. Your best practices become reusable workflows and agents that maintain quality while multiplying velocity. This is how small teams outperform large organizations.

Here’s how to do it.

The Core Insight: Context Is Everything

In my experience working with teams that adopt AI tools, I’ve learned that when you provide an LLM with the exact right context, you achieve dramatically higher quality output. LLMs benefit from more context, but only if it’s relevant to the task at hand.

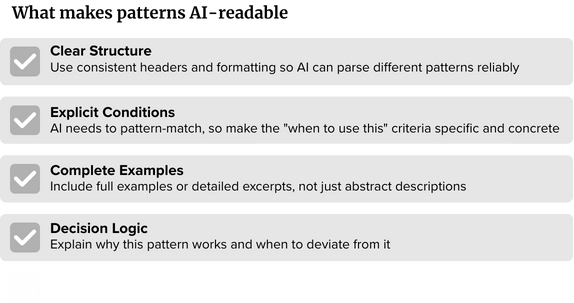

The key is structuring that context so AI can parse and apply it effectively. Random notes scattered across documents won’t work. However, well-structured context files — such as PATTERNS.md or STANDARDS.md — become force multipliers that scale across your entire organization.

This is how you build the Flywheel. When your patterns and standards are AI-readable, they become reusable workflows that any team member can invoke. Your institutional knowledge compounds: the sales team’s briefing patterns help marketing build campaigns. The compliance team’s guardrails prevent issues from reaching the legal review stage. One senior person’s judgment gets encoded and applied across dozens of projects simultaneously.

The result: quality and velocity both improve, without adding headcount. Your organization scales through codified intelligence, not just through hiring.

Making Pattern Libraries AI-Readable

The Opportunity Most Teams Miss

You’ve documented your best practices. You’ve identified the patterns that work. But if those patterns live in a PDF buried in SharePoint or in someone’s head, they’re not accessible when your team is prompting AI.

The solution: create structured pattern files that both humans and AI can reference.

Example: PATTERNS.md Structure

Here’s what an AI-readable pattern library looks like, using the B2B sales team example from my previous article:

# Executive Briefing Patterns

## Pattern 1: The Confidence Builder

**Use when:**
- Audience: Non-technical business sponsor
- Stage: Active evaluation (not early exploration)
- Setting: Scheduled customer experience center visit (20-min slot)
- Need: Prospect must build internal business case for investment

**Structure:**
1. Opening (2 min): State their business challenge in their language
   - Use specific pain points from discovery
   - Avoid product terminology
2. Business Impact (5 min): Why this challenge is costly now
   - Quantify current state costs (inefficiency, risk, opportunity cost)
   - Reference industry benchmarks or peer examples
3. Social Proof (4 min): How companies like theirs solved it
   - Name 2-3 similar customers with specific results
   - Focus on business outcomes, not technical implementation
4. Our Approach (6 min): Solution overview with minimal technical depth
   - High-level architecture or methodology
   - Differentiation from alternatives
   - Why it de-risks their initiative
5. Path Forward (3 min): Clear pilot-to-scale progression
   - Specific timeline and milestones
   - What resources they'll need to commit
   - Next concrete step

**Example:**
[See /examples/confidence-builder-financial-services-march2024.pdf]

**Why this works:**
Business sponsors need ammunition for internal champions. Technical depth triggers "I need to bring my technical team." Business impact + peer validation gives them what they need to sell internally.

**When to deviate:**
- If prospect is technical buyer → use Pattern 2 (Technical Deep Dive)
- If early exploration stage → use Pattern 4 (Vision Alignment)
- If trade show drive-by (5-min) → use Pattern 5 (Quick Hook)

## Pattern 2: Technical Deep Dive

[Continue with next pattern...]

How Your Team Uses This

When preparing a briefing, team members prompt their preferred LLM:

I'm preparing an executive briefing using Pattern 1 (Confidence Builder) from our PATTERNS.md file.

Here's the prospect context:
- Company: [name], financial services, $2B revenue
- Challenge: Manual compliance processes creating risk exposure
- Attendees: VP Operations (business sponsor), Director of Compliance
- Setting: Our CX center, 20-minute slot
- Discovery notes: [paste key insights]

Draft the briefing structure following Pattern 1, customizing for this context.

The AI applies the pattern scaffolding while customizing content to the specific prospect. Your team spends time on strategy rather than structure.
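If your team assembles these prompts in a script instead of pasting by hand, the step can be sketched roughly as follows. This is a minimal illustration, not a prescribed workflow: the function names `extract_pattern` and `build_briefing_prompt` are hypothetical, the inline `PATTERNS_MD` string is an abbreviated stand-in for a real file, and the sketch assumes every pattern starts with a `## Pattern N:` heading as in the example above.

```python
import re

# Abbreviated stand-in for a real PATTERNS.md; in practice you would read the file from disk.
PATTERNS_MD = """\
# Executive Briefing Patterns

## Pattern 1: The Confidence Builder
**Use when:**
- Audience: Non-technical business sponsor

## Pattern 2: Technical Deep Dive
**Use when:**
- Audience: Technical buyer
"""


def extract_pattern(patterns_md: str, number: int) -> str:
    """Return the '## Pattern N: ...' section, up to the next '## ' heading."""
    match = re.search(
        rf"^## Pattern {number}:.*?(?=^## |\Z)",
        patterns_md,
        flags=re.MULTILINE | re.DOTALL,
    )
    if match is None:
        raise ValueError(f"Pattern {number} not found")
    return match.group(0).strip()


def build_briefing_prompt(pattern_text: str, context: dict[str, str]) -> str:
    """Combine one pattern and the prospect context into a single prompt."""
    # The first line of the section is its heading; drop the leading '#' marks.
    title = pattern_text.splitlines()[0].lstrip("# ").strip()
    context_lines = "\n".join(f"- {key}: {value}" for key, value in context.items())
    return (
        f"I'm preparing an executive briefing using {title}.\n\n"
        f"Pattern definition from our PATTERNS.md file:\n{pattern_text}\n\n"
        f"Here's the prospect context:\n{context_lines}\n\n"
        "Draft the briefing structure following this pattern, customizing for this context."
    )


prompt = build_briefing_prompt(
    extract_pattern(PATTERNS_MD, 1),
    {
        "Company": "[name], financial services, $2B revenue",
        "Setting": "Our CX center, 20-minute slot",
    },
)
print(prompt)
```

Sending only the one relevant pattern, not the whole library, keeps the context focused on the task at hand.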

The Compounding Benefit

As you add patterns, your AI context gets smarter. New team members onboard faster — they inherit institutional knowledge through the pattern library. AI consistently applies best practices without your team having to remember every nuance.

One sales team I work with has 12 patterns in their library. Their ramp time for new AEs dropped from 3 months to 6 weeks because new hires could reference patterns + AI to produce quality briefings while they were still learning the product.

However, the real breakthrough came when they realized these patterns could be applied across various functions. Marketing adapted the “Confidence Builder” pattern for case studies. Customer Success used the “Technical Deep Dive” pattern for executive business reviews. One senior sales leader’s accumulated wisdom was now being applied across three departments without that person being in the room.

This is the compounding effect: codified intelligence that multiplies impact without multiplying headcount.

Tips for Building Your Pattern Library

Start small: Don’t try to document everything. Start with 3–5 patterns that cover your most common scenarios.

Use consistent formatting: Pick a structure (Conditions → Solution → Rationale → Example → Deviations) and stick to it. Consistency helps AI parse and apply patterns reliably.

Include real examples: Use sanitized versions of actual successful work. Models follow concrete examples more reliably than abstract descriptions.

Update regularly: When you discover a new pattern or refine an existing one, update the file accordingly. Treat it as living documentation, not a one-time exercise.

Store it where AI can access it: In your project repository, your team’s shared workspace, or uploaded as context when prompting. The easier it is to reference, the more it gets used.
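The consistent-formatting tip is easy to check mechanically. The sketch below is one possible lint, assuming the section labels used in the PATTERNS.md example earlier (`**Use when:**`, `**Structure:**`, and so on) and a `## Pattern N:` heading per pattern. The function name `find_missing_sections` and the sample text are hypothetical.

```python
import re

# Section labels taken from the PATTERNS.md example; adjust to your own structure.
REQUIRED_SECTIONS = [
    "**Use when:**",
    "**Structure:**",
    "**Example:**",
    "**Why this works:**",
    "**When to deviate:**",
]

SAMPLE = """\
# Executive Briefing Patterns

## Pattern 1: The Confidence Builder
**Use when:** scheduled 20-minute slot with a business sponsor
**Structure:** opening, business impact, social proof, approach, path forward
**Example:** (link to a sanitized briefing)
**Why this works:** sponsors need material to sell internally
**When to deviate:** technical buyer, early exploration, trade show drive-by

## Pattern 2: Technical Deep Dive
**Use when:** the prospect is a technical buyer
**Structure:** (to be written)
**Why this works:** (to be written)
"""


def find_missing_sections(patterns_md: str) -> dict[str, list[str]]:
    """Map each pattern title to the required section labels it lacks."""
    # Split at each pattern heading; the first chunk is the file preamble, so skip it.
    chunks = re.split(r"^## (?=Pattern \d+:)", patterns_md, flags=re.MULTILINE)[1:]
    report = {}
    for chunk in chunks:
        title, _, body = chunk.partition("\n")
        missing = [label for label in REQUIRED_SECTIONS if label not in body]
        if missing:
            report[title.strip()] = missing
    return report


for title, missing in find_missing_sections(SAMPLE).items():
    print(f"{title}: missing {', '.join(missing)}")
```

Running a check like this whenever the file changes is one way to keep the library from drifting as more people contribute patterns.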

Encoding Guardrails as AI Instructions

The Leverage in Automated Quality Checks

Once you’ve built your rubrics and golden sets for quality standards, encode them into a format AI can check against automatically. This is where the real time savings happen — and where your team’s judgment muscles get strengthened through immediate feedback.

The Key Principle

AI excels at evaluating objective criteria against explicit standards. It’s terrible at inferring what you care about. Be specific, provide examples, and structure your guardrails so AI knows exactly what to look for.

Example: Healthcare Marketing Compliance Standards

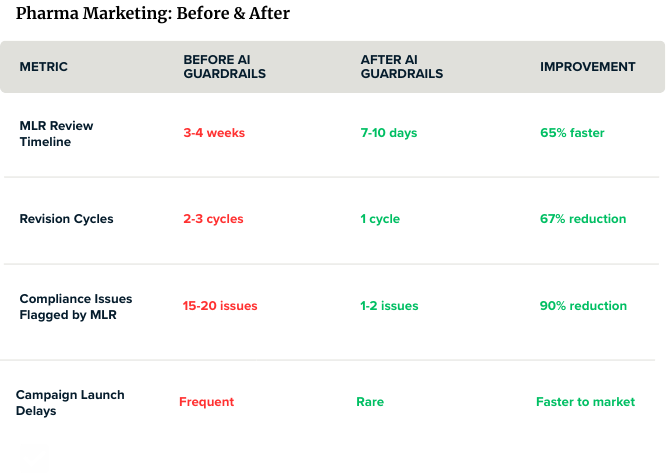

Let me share a real-world example from my work with a global pharmaceutical company. They were producing marketing assets across multiple brands and regions, all of which required MLR (Medical, Legal, Regulatory) review before publication.

The challenge: MLR reviews were taking 3–4 weeks per asset, with 2–3 revision cycles as reviewers identified compliance issues such as unsubstantiated claims, missing safety information, or prohibited language.

We built an AI-readable standards file that marketing teams could check against before MLR submission:

# Healthcare Marketing Compliance Standards

## Brand Claims - Product X (Diabetes Therapy)

**Approved claims (with substantiation):**

- "Reduces A1C by up to 1.5%" [Source: Phase 3 Trial XYZ-2023, n=1,247]

- "Once-daily dosing" [Source: Product Label, FDA-approved]

- "Demonstrated cardiovascular safety" [Source: CVOT Study ABC-2022]

**Prohibited claims:**

- Comparative language ("better than," "superior to") without head-to-head data

- Absolute language ("eliminates," "cures," "prevents")

- Off-label indications or patient populations

- Quality-of-life claims without validated instrument data

**Required context for every efficacy claim:**

- Patient population studied

- Study design and sample size

- Safety information within 2 paragraphs of efficacy claim

- Cardiovascular safety data must accompany A1C reduction claims

## Safety Information Requirements

**Product X is a Schedule IV controlled substance - required disclaimers:**

**Patient brochures:**

- Full prescribing information URL

- Boxed warning summary (verbatim):

"WARNING: RISK OF SERIOUS HYPOGLYCEMIA
Product X can cause severe hypoglycemia. Patients with renal impairment, elderly patients, and those taking certain medications are at increased risk. Monitor blood glucose and adjust dosage accordingly."

**Physician detail aids:**

- ISI (Important Safety Information) on every page with efficacy data

**Digital ads:**

- Abbreviated ISI + "Click here for full prescribing information"

## Prohibited Content

**Images that imply:**

- Off-label use (children, pregnant women unless indicated)

- Lifestyle improvements not substantiated in trials

- Activities requiring medical supervision without disclaimer

**Language to avoid:**

- Superlatives: "miracle," "breakthrough," "revolutionary"

- Comparative claims without data: "best," "most effective," "fastest-acting"

- Guarantees: "will reduce," "eliminates," "prevents all"

- Patient testimonials (unless part of approved campaign with disclaimers)

## Regional Variations

**United States (FDA):**

- Fair balance required: risks equal prominence to benefits

- ISI required on all promotional materials

- No reminder ads without full PI link

**European Union (EMA):**

- Direct-to-consumer advertising prohibited for prescription meds

- HCP materials must include Summary of Product Characteristics

- Stricter adverse event reporting requirements

**Asia-Pacific:**

- Japan: All materials require PMDA pre-approval

- China: NMPA prohibits certain comparative claims

- Australia: TGA requires prominent "Seek medical advice"

## Substantiation Requirements

Every claim must link to:

1. Approved source (clinical trial, product label, peer-reviewed publication)

2. Specific data point (result, confidence interval, p-value if applicable)

3. Patient population and study context

**Properly substantiated claim:**

"Product X reduced A1C by 1.5% (95% CI: 1.2-1.8%, p<0.001) in adults with type 2 diabetes inadequately controlled on metformin alone (n=1,247, Phase 3 Trial XYZ-2023)"

**Unsubstantiated claim (FLAG):**

"Product X significantly improves blood sugar control"

[Missing: specific data, patient population, study reference]

## Severity Levels

**Critical (Must fix before MLR):**

- Unsubstantiated efficacy claims

- Missing required safety information

- Prohibited comparative language without data

- Off-label implications in imagery or copy

- Missing required disclaimers

**Important (Fix unless justified):**

- Incomplete substantiation (missing CI, p-value, study name)

- Safety information not proximate to efficacy claims

- Regional compliance variations not addressed

- Ambiguous language implying unsupported benefits

**Consider (MLR judgment call):**

- Tone that might overstate benefit

- Image selection that could be misinterpreted

- Language complexity for patient audiences

- Balance between engagement and conservatism

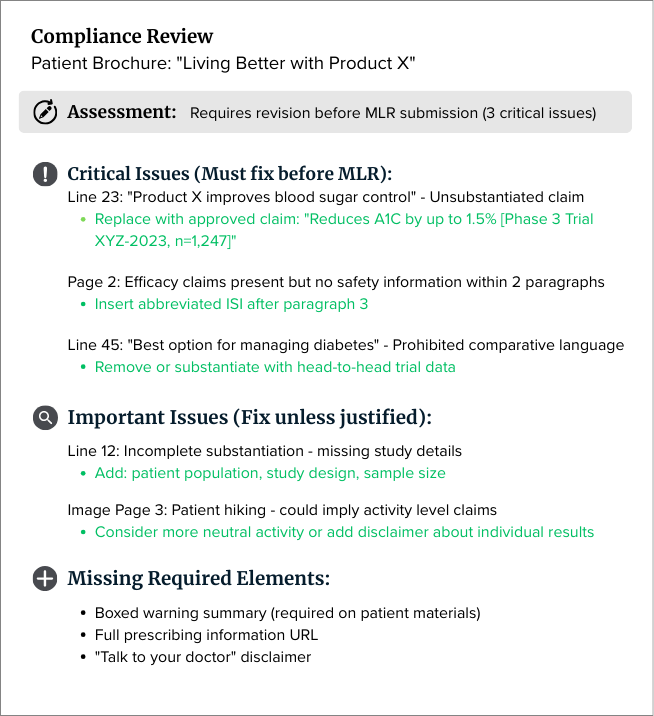

The pharmaceutical company used severity levels extensively because compliance violations have real regulatory consequences:

Critical issues could lead to FDA warning letters or required corrective actions. These must be fixed before MLR submission.

Important issues would likely be flagged by MLR but won’t stop the process — they’re fixable during review.

Consider items are judgment calls that MLR reviewers might debate — tone, image selection, and language complexity.
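The three severity levels translate into a simple submission gate. The following is a minimal sketch under stated assumptions: the `Issue` class, its `justification` field, and the `ready_for_mlr` function are hypothetical names for illustration, not part of the company's actual tooling.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    severity: str            # "critical", "important", or "consider"
    description: str
    justification: str = ""  # filled in when the team decides not to fix an "important" issue


def ready_for_mlr(issues: list[Issue]) -> bool:
    """Apply the severity rules: critical blocks, important needs a fix or a justification."""
    for issue in issues:
        if issue.severity == "critical":
            return False
        if issue.severity == "important" and not issue.justification:
            return False
    # "consider" items are left for MLR reviewers to judge.
    return True


open_issues = [
    Issue("important", "Missing p-value on A1C claim", justification="Label claim, no trial data cited"),
    Issue("consider", "Tone might overstate benefit"),
]
print(ready_for_mlr(open_issues))
```

Keeping "consider" items out of the gate mirrors the intent of the levels: those are the debates you want MLR reviewers to spend their time on.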

How Marketing Teams Use This

After drafting patient education materials or promotional content, they run it through an AI compliance check:

Review this patient education brochure for Product X against our HEALTHCARE_COMPLIANCE_STANDARDS.md file.

Check for:
1. Brand claims (all substantiated with approved sources?)
2. Safety information (required disclaimers present and properly placed?)
3. Prohibited content (any red-flag language or imagery implications?)
4. Regional compliance (US market - FDA requirements met?)

Provide:
- List of compliance issues (by severity: critical/important/consider)
- Specific suggestions with approved language where applicable
- Missing required elements
- Overall assessment: ready for MLR or needs revision?

[Paste draft here]

The AI returns a structured compliance report that groups issues by severity.

Marketing fixes flagged issues, verifies with AI again, then submits to MLR. The MLR team receives materials that have passed basic compliance checks, allowing them to focus on medical accuracy, appropriate patient populations, and strategic positioning.
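Some of the checks in the standards file are plain string matches, so they can also run as a deterministic pre-check alongside the AI review. To be clear, this is a swapped-in complement and not the LLM-based check described above. The phrase lists are copied from the "Language to avoid" section of the example standards file, while the `scan_draft` function and the sample draft are hypothetical.

```python
import re

# Phrases from the "Language to avoid" section of the example standards file.
PROHIBITED_PHRASES = {
    "superlative": ["miracle", "breakthrough", "revolutionary"],
    "comparative claim without data": ["best", "most effective", "fastest-acting"],
    "guarantee": ["will reduce", "eliminates", "prevents all"],
}


def scan_draft(draft: str) -> list[tuple[int, str, str]]:
    """Return (line_number, category, phrase) for each prohibited phrase found."""
    findings = []
    for line_number, line in enumerate(draft.splitlines(), start=1):
        for category, phrases in PROHIBITED_PHRASES.items():
            for phrase in phrases:
                # Whole-word, case-insensitive match so "best" does not hit "bestow".
                if re.search(rf"\b{re.escape(phrase)}\b", line, flags=re.IGNORECASE):
                    findings.append((line_number, category, phrase))
    return findings


draft = (
    "Product X is a breakthrough in diabetes care.\n"
    "It will reduce your A1C.\n"
    "Once-daily dosing."
)
for line_number, category, phrase in scan_draft(draft):
    print(f"line {line_number}: {category}: '{phrase}'")
```

A scan like this only catches exact wording. Context-dependent items, such as whether a comparative claim has head-to-head data behind it, still need the AI review and ultimately MLR.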

The Results

Quality improvement: Junior copywriters learned compliance requirements faster through immediate feedback. They internalized what “substantiated claim” meant after seeing AI flag unsubstantiated language repeatedly. More tenured team members sometimes discovered regulatory changes they had missed when those changes were first communicated in training sessions or memos.

Strategic benefit: Review cycles can focus on judgment calls — such as medical accuracy, therapeutic positioning, and appropriate messaging — rather than catching missing disclaimers.

Risk management: The company moved faster in regulated markets without increasing compliance risk.

The Compounding Advantage

Here’s what I’ve observed across multiple teams that have implemented AI-readable standards:

Onboarding accelerates: New team members get institutional knowledge encoded in their workflow from day one. They’re productive faster because they’re not relying on tribal knowledge.

Quality becomes consistent: Everyone has access to the same patterns and standards. Output quality converges upward because the baseline is encoded.

Senior capacity is freed: Experienced team members stop doing QA on objective criteria. They focus on strategic judgment, mentorship, and the truly hard problems.

Learning loops tighten: Immediate feedback from AI checks means people learn standards faster. They don’t wait days or weeks to find out what was wrong.

Iteration accelerates: Teams can test more approaches, faster, because the scaffolding and checks are automated. They explore more creative solutions because the baseline is handled.

Knowledge compounds across functions: Patterns built in one team become reusable workflows for others. Sales patterns inform marketing. Compliance standards prevent legal issues. Design systems scale across product teams. One expert’s judgment multiplies across the organization.

This is how AI-first organizations scale differently. They’re not just working faster — they’re building systems that convert individual expertise into organizational capability. A 10-person team with well-encoded patterns and standards can outperform a 100-person team still relying on tribal knowledge and manual review cycles.

The constraint isn’t talent or tools anymore. It’s whether you’ve codified your craft in a format that scales.

The goal isn’t to replace human judgment — it’s to free your team to focus their judgment on what matters. Encode the repeatable, automate the verifiable, and spend human energy on the strategic choices that actually move the needle.

That’s how you turn generative AI from a faster typewriter into a force multiplier.